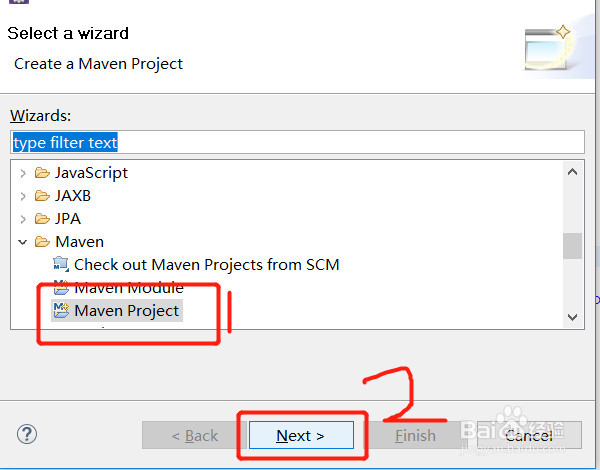

1. First, create a Maven project: find Maven Project in the wizard, then click Next.

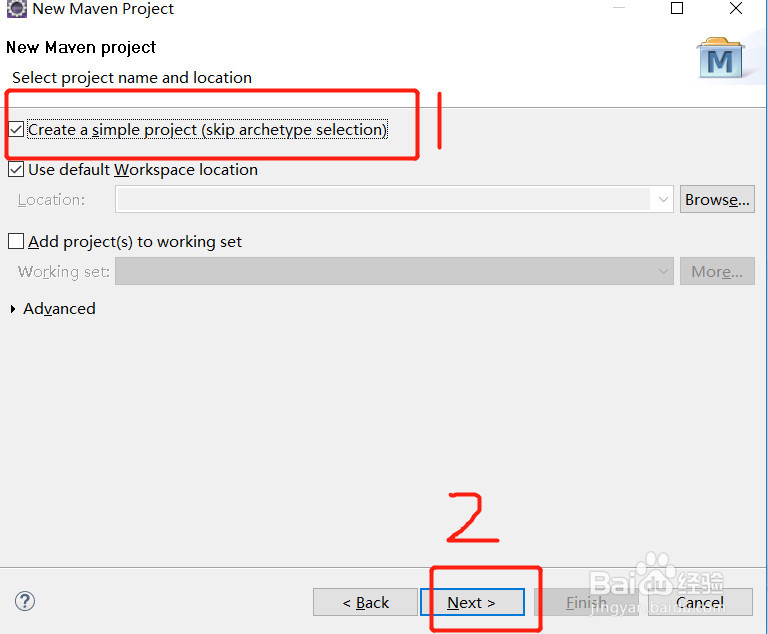

2. Check Create a simple project (skip archetype selection), then click Next.

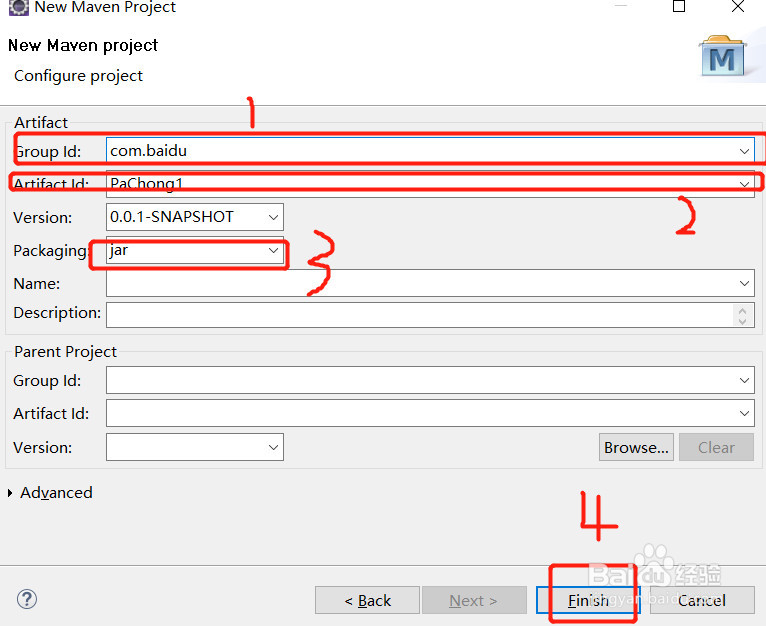

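3. Enter your domain name in the Group Id field (conventionally reversed, e.g. com.baidu) and the project name in the Artifact Id field, then click Finish. The Maven project is now created.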
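Group Id, Artifact Id and version together form the project's Maven coordinates, and Maven uses them to decide where the built artifact is stored in a repository. The sketch below spells out that layout rule in plain Java; `MavenCoordinates.repositoryPath` is a hypothetical helper, not a Maven API. It uses the coordinates from this article's pom.xml and assumes a local repository such as `~/.m2/repository` (snapshots deployed to a remote repository may get timestamped file names instead).

```java
// Hypothetical helper illustrating Maven's repository layout rule (no Maven libraries used).
class MavenCoordinates {

    /**
     * Path, relative to a repository root such as ~/.m2/repository, where Maven
     * stores an artifact: groupId with dots turned into slashes, then artifactId,
     * then version, then the file artifactId-version.extension.
     */
    static String repositoryPath(String groupId, String artifactId, String version, String extension) {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version + "/"
                + artifactId + "-" + version + "." + extension;
    }

    public static void main(String[] args) {
        // Coordinates taken from this article's pom.xml (<packaging>jar</packaging>)
        System.out.println(repositoryPath("com.baidu", "PaChong1", "0.0.1-SNAPSHOT", "jar"));
    }
}
```

Running `main` prints `com/baidu/PaChong1/0.0.1-SNAPSHOT/PaChong1-0.0.1-SNAPSHOT.jar`, which is where `mvn install` places the jar under the local repository.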

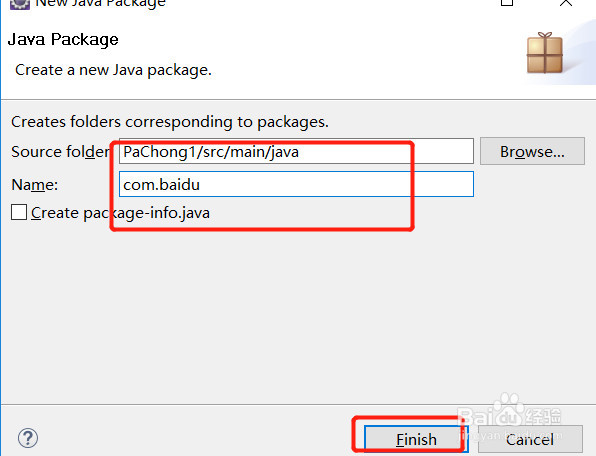

4. Next, create the project's package (com.baidu in this article), as shown in the figure.

5. Configure pom.xml:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.baidu</groupId>
    <artifactId>PaChong1</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <packaging>jar</packaging>

    <!-- Add the following to the pom.xml of your Maven project (jar or war packaging both work)
         to import the jars. I download them through the Aliyun mirror repository
         (a mirror is configured in Maven's settings.xml, not in this pom). -->
    <dependencies>
        <dependency>
            <groupId>org.apache.httpcomponents</groupId>
            <artifactId>httpclient</artifactId>
            <version>4.3.1</version>
        </dependency>
        <!-- SLF4J -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>1.7.21</version>
        </dependency>
        <!-- Logback -->
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-core</artifactId>
            <version>1.1.3</version>
        </dependency>
        <dependency>
            <groupId>ch.qos.logback</groupId>
            <artifactId>logback-classic</artifactId>
            <version>1.1.3</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>log4j-over-slf4j</artifactId>
            <version>1.7.21</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.projectlombok/lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.16.20</version>
        </dependency>
    </dependencies>
</project>
```
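If the project later fails with missing classes, one quick way to confirm that Maven actually resolved these dependencies is to try loading one class from each artifact. The probe below is a sketch: the class `DependencyCheck` is hypothetical, and the class names in `PROBES` are one representative class per dependency, picked for illustration rather than specified by the pom.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical classpath probe: one representative class per dependency in pom.xml.
class DependencyCheck {

    static final String[][] PROBES = {
        {"httpclient", "org.apache.http.impl.client.HttpClients"},
        {"slf4j-api", "org.slf4j.LoggerFactory"},
        {"logback-core", "ch.qos.logback.core.Appender"},
        {"logback-classic", "ch.qos.logback.classic.LoggerContext"},
        {"log4j-over-slf4j", "org.apache.log4j.Logger"},
        {"lombok", "lombok.Data"},
    };

    /** True if the class can be loaded; initialize=false skips its static initializers. */
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, DependencyCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    /** Maps each artifactId to whether its probe class was found, in pom order. */
    static Map<String, Boolean> check() {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String[] probe : PROBES) {
            result.put(probe[0], isOnClasspath(probe[1]));
        }
        return result;
    }

    public static void main(String[] args) {
        for (Map.Entry<String, Boolean> e : check().entrySet()) {
            System.out.println(e.getKey() + ": " + (e.getValue() ? "found" : "MISSING"));
        }
    }
}
```

Run it from inside the Maven project; every line should read `found` once the dependencies have been downloaded.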

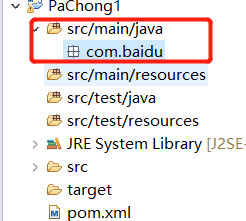

6. Once that is done, the project structure should look like the figure. Then create a class in the com.baidu package.
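Under Maven's standard directory layout, a package is just a directory path below the source root `src/main/java`, so a class created in com.baidu ends up at a predictable location. The helper below is hypothetical (not part of any Maven API) and only illustrates that mapping, using the class name from this article's code.

```java
// Hypothetical helper: where Maven's standard layout puts a class's source file.
class SourceLayout {

    /** Maps a package and class name to its path under src/main/java. */
    static String sourcePath(String packageName, String className) {
        return "src/main/java/" + packageName.replace('.', '/') + "/" + className + ".java";
    }

    public static void main(String[] args) {
        System.out.println(sourcePath("com.baidu", "HttpGetUtils"));
    }
}
```

The compiled class follows the same package-to-directory rule under `target/classes`.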

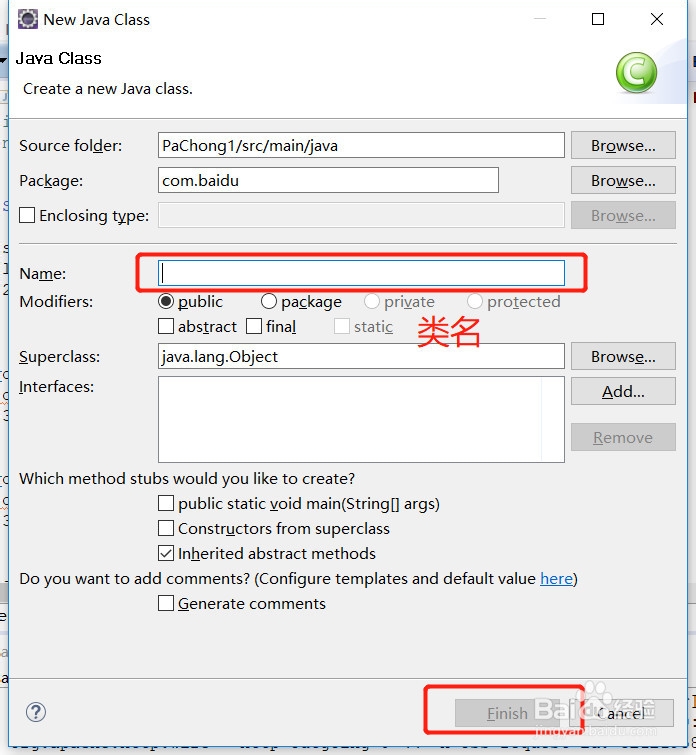

7. Enter a class name; any name you like will do (this article uses HttpGetUtils).

8. Write the implementation in the class:

```java
package com.baidu;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;

import org.apache.http.HttpEntity;
import org.apache.http.HttpStatus;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;

public class HttpGetUtils {

    public static void main(String[] args) {
        // Other pages to try: https://v.qq.com/  http://www.youku.com/
        String str = get("http://m.sunlands.com");
        System.out.println(str);
    }

    private static String get(String url) {
        String result = "";
        // Get an HttpClient instance
        CloseableHttpClient httpclient = HttpClients.createDefault();
        try {
            // Build the GET request
            HttpGet httpGet = new HttpGet(url);
            // Execute the request and get the response
            CloseableHttpResponse response = httpclient.execute(httpGet);
            try {
                // Parse the body only if the request succeeded with HTTP 200
                if (response.getStatusLine().getStatusCode() == HttpStatus.SC_OK) {
                    System.out.println(response.getStatusLine());
                    HttpEntity entity = response.getEntity();
                    // Read the result from the entity's input stream
                    result = readResponse(entity, "utf-8");
                }
            } finally {
                // Close the response first, before the client
                response.close();
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // Close the client last; this also runs if execute() throws
            try {
                httpclient.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        return result;
    }

    private static String readResponse(HttpEntity resEntity, String charset) {
        if (resEntity == null) {
            return null;
        }
        StringBuilder res = new StringBuilder();
        BufferedReader reader = null;
        try {
            reader = new BufferedReader(new InputStreamReader(resEntity.getContent(), charset));
            String line;
            // readLine() strips line breaks, so the lines are concatenated
            while ((line = reader.readLine()) != null) {
                res.append(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (reader != null) {
                    reader.close();
                }
            } catch (IOException e) {
                // ignore errors while closing
            }
        }
        return res.toString();
    }
}
```
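`readResponse` always decodes the body as UTF-8, which garbles pages served in another encoding such as GBK, used by some Chinese sites. Here is a JDK-only sketch with hypothetical names (`ResponseReader`, `charsetOf`, `readAll`) that reuses the same line-by-line loop on a plain `InputStream` and takes the charset from a Content-Type header value, falling back to UTF-8. It assumes you pass the header text in yourself; with HttpClient it could come from `entity.getContentType()`, a `Header` that may be null.

```java
import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Locale;

// Sketch: readResponse()'s loop on a plain InputStream, with the charset taken from Content-Type.
class ResponseReader {

    /** Extracts charset=... from e.g. "text/html; charset=GBK"; returns fallback if absent or unknown. */
    static Charset charsetOf(String contentType, Charset fallback) {
        if (contentType == null) {
            return fallback;
        }
        for (String part : contentType.split(";")) {
            String p = part.trim();
            if (p.toLowerCase(Locale.ROOT).startsWith("charset=")) {
                String name = p.substring("charset=".length()).replace("\"", "").trim();
                try {
                    return Charset.forName(name);
                } catch (IllegalArgumentException e) {
                    // Covers IllegalCharsetNameException and UnsupportedCharsetException
                    return fallback;
                }
            }
        }
        return fallback;
    }

    /** Same loop as readResponse(): reads line by line and concatenates, dropping line breaks. */
    static String readAll(InputStream in, Charset charset) throws IOException {
        StringBuilder res = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, charset))) {
            String line;
            while ((line = reader.readLine()) != null) {
                res.append(line);
            }
        }
        return res.toString();
    }

    public static void main(String[] args) throws IOException {
        // Simulated GBK-encoded page body; \u4f60\u597d is "ni hao"
        byte[] body = "<html>\n<title>\u4f60\u597d</title>\n</html>".getBytes(Charset.forName("GBK"));
        Charset cs = charsetOf("text/html; charset=GBK", StandardCharsets.UTF_8);
        System.out.println(readAll(new ByteArrayInputStream(body), cs));
    }
}
```

Because `readAll` mirrors the original loop it also drops line breaks; append a newline after each line if you need to keep them.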

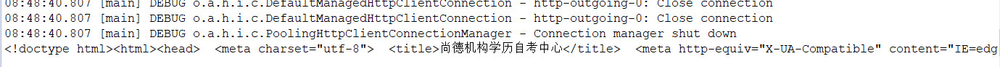

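9. Run HttpGetUtils. If the console shows the HTTP status line (for example `HTTP/1.1 200 OK`) followed by the page's HTML, as in the screenshot, it worked.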